How to Run Ollama on Android Termux

Why Run Ollama On Android Termux?

Running Ollama on Android Termux lets you use AI models offline without cloud restrictions. This guide provides the easiest installation method (TUR Repo) and performance tips for smooth AI interactions on mobile.

Step 1: Install Termux Correctly (Avoid Play Store Version)

❌ Google Play’s Termux is outdated. Use F-Droid for the latest version:

1. Download F-Droid (https://f-droid.org)

2. Search “Termux” → Install

3. Open Termux and run:

pkg update && pkg upgrade -y

Why? Newer versions support TUR Repo, essential for Ollama.

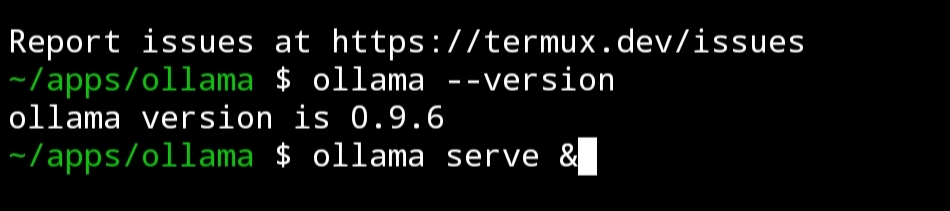

Step 2: Add TUR Repo & Install Ollama (Fastest Method)

Instead of slow Linux emulation, use the Termux User Repository (TUR) for direct installation:

Commands:

pkg install tur-repo -y

pkg update

pkg install ollama -y

ollama --version
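
As a quick sanity check after the install commands, the following minimal sketch confirms that the ollama binary is on your PATH before you continue. The helper name have_cmd is made up for this example and is not part of Termux or Ollama.

```shell
# Hypothetical helper: succeed if the given command is available on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

if have_cmd ollama; then
  # Print the installed version, same as the last command in Step 2.
  ollama --version
else
  echo "ollama not found; rerun the Step 2 install commands"
fi
```

If the second message appears, restarting Termux before reinstalling is usually worth a try.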

Time Saved: This skips downloading and configuring an Ubuntu proot environment, so setup is much quicker.

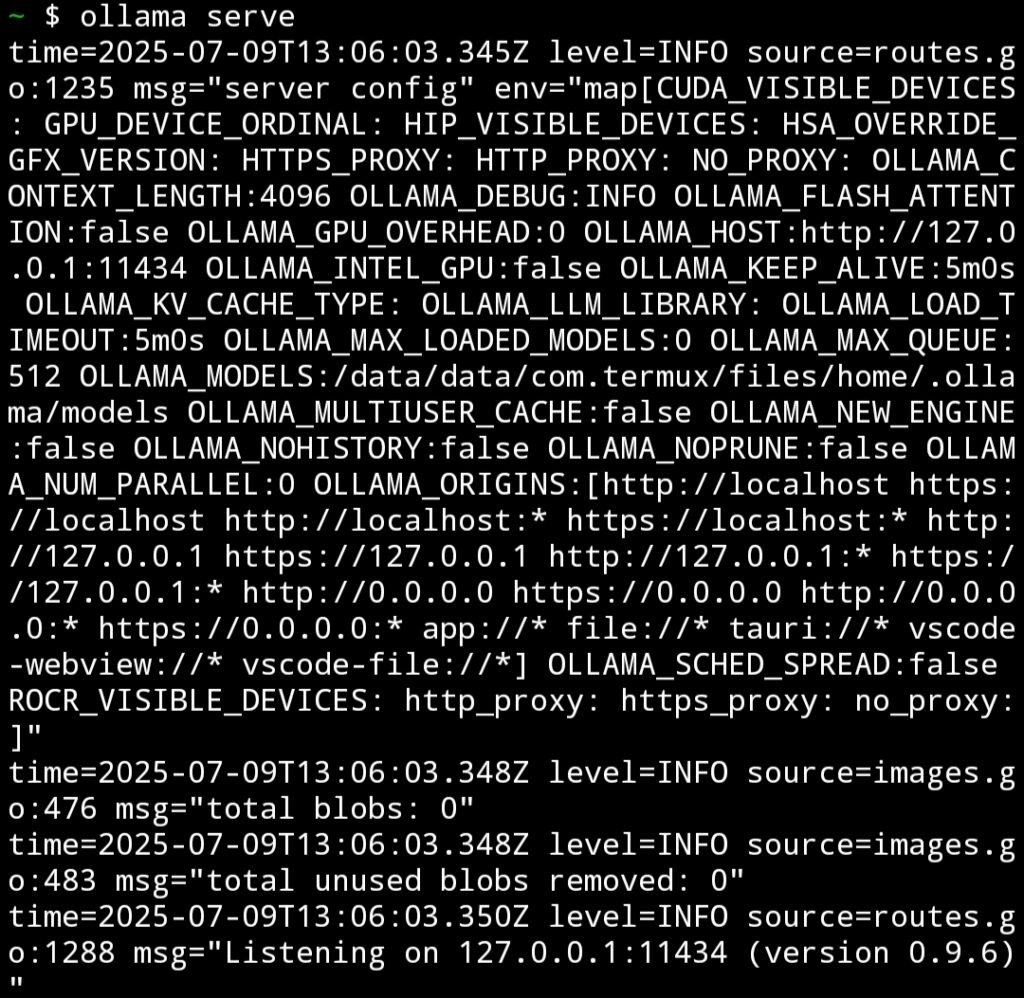

Step 3: Start Ollama & Fix Connection Errors

Error:

“Error: could not connect to Ollama app, is it running?”

Solution:

Run Ollama in background mode:

ollama serve &

To kill the service later, list what is running with:

ps

Then kill the process labeled ollama with the kill command:

kill #### (where #### is the PID of the ollama process from the ps output)

Or run ollama serve in its own session and open a new Termux session for your other commands (swipe in from the left edge of the screen and tap New session).
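
The connection error usually just means the server is not answering yet. Here is a minimal sketch that starts the server only when needed and waits until it responds. It assumes Ollama listens on its default address (127.0.0.1:11434) and that curl is installed (pkg install curl). The variable OLLAMA_URL, the function names, and the log path are invented for this example.

```shell
# Assumption: the server uses Ollama's default address and port.
OLLAMA_URL="${OLLAMA_URL:-http://127.0.0.1:11434}"

# Poll the server once per second; succeed as soon as it answers,
# fail after the given number of attempts (default 10).
wait_for_ollama() {
  tries="${1:-10}"
  while [ "$tries" -gt 0 ]; do
    if curl -fsS "$OLLAMA_URL/" >/dev/null 2>&1; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Start the server in the background unless it is already answering.
start_ollama() {
  if wait_for_ollama 1; then
    echo "Ollama is already running"
    return 0
  fi
  # Hypothetical log location; change it to suit your setup.
  ollama serve >"$HOME/ollama.log" 2>&1 &
  wait_for_ollama 15
}
```

With these functions loaded in your shell, something like start_ollama && ollama run tinyllama should avoid the "could not connect" message.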

Step 4: Best AI Models for Android (Low RAM Usage)

Android devices have limited RAM, so avoid large models like LLaMA 7B. Instead, use:

| Model | Command | RAM Usage |
|---|---|---|
| TinyLlama | ollama pull tinyllama | ~2GB |
| Phi-2 | ollama pull phi | ~1.5GB |
| Gemma 2B | ollama pull gemma:2b | ~3GB |

Pro Tip: Close background apps before running models.
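
To see how much memory is actually free before pulling a model, you can read /proc/meminfo. The sketch below is only a rough guide: the thresholds are copied from the approximate RAM figures in the table above, the function names are invented for this example, and it assumes /proc/meminfo is readable on your device and reports a MemAvailable line.

```shell
# Print MemAvailable from a meminfo-style file, converted from kB to MB.
available_mb() {
  awk '/^MemAvailable:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Suggest a model tag for a given amount of free RAM in MB.
# Thresholds mirror the table's rough figures; they are not measurements.
suggest_model() {
  if [ "$1" -ge 3072 ]; then
    echo "gemma:2b"
  elif [ "$1" -ge 2048 ]; then
    echo "tinyllama"
  elif [ "$1" -ge 1536 ]; then
    echo "phi"
  else
    echo "none"
  fi
}

free_mb="$(available_mb 2>/dev/null || true)"
echo "Available RAM: ${free_mb:-unknown} MB; suggested model: $(suggest_model "${free_mb:-0}")"
```

A result of none suggests closing background apps and checking again before pulling anything.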

Step 5: Using Ollama in Termux (Commands Cheat Sheet)

| Task | Command |
|---|---|
| List models | ollama list |
| Remove a model | ollama rm <model-name> |
| Run a model | ollama run tinyllama |
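
The commands above can be combined into a small helper that pulls a model only when it is missing and then runs it. This is a sketch, not an official Ollama feature: the function names are made up, and it assumes ollama list prints a header line followed by one model per line with the name in the first column.

```shell
# Succeed if the model appears in `ollama list`, either by its exact name
# or with the default ":latest" tag appended. The header line is skipped.
model_installed() {
  ollama list 2>/dev/null |
    awk -v m="$1" 'NR > 1 && ($1 == m || $1 == (m ":latest")) { found = 1 } END { exit !found }'
}

# Pull the model only when it is missing, then start a chat with it.
run_model() {
  model_installed "$1" || ollama pull "$1" || return 1
  ollama run "$1"
}
```

For example, run_model tinyllama downloads TinyLlama on the first call and goes straight to the chat prompt on later calls.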

Troubleshooting Common Issues

1. “Ollama command not found”

- Fix: Restart Termux or reinstall:

pkg reinstall ollama -y

2. Crashes Due to Low Memory

- Use TinyLlama instead of larger models.

- Run termux-wake-lock to prevent sleep mode interruptions.
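
A wake lock stays active until it is released, so it can be handy to hold it only while a command runs. The wrapper below is a small sketch of that idea; with_wake_lock is a made-up name, while termux-wake-lock and termux-wake-unlock are the standard Termux commands.

```shell
# Hold a Termux wake lock while the given command runs, then release it
# and pass the command's exit status back to the caller.
with_wake_lock() {
  termux-wake-lock
  status=0
  "$@" || status=$?
  termux-wake-unlock
  return "$status"
}
```

Usage would look like with_wake_lock ollama run tinyllama, which releases the lock as soon as the chat session ends.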

3. Storage Full? Delete Unused Models

ollama rm gemma:7b # Example

Advanced: Auto-Start Ollama at Boot (Termux Services)

- Install Termux Services:

pkg install termux-services -y

- Enable Ollama as a service:

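sv-enable ollama

(Restart Termux after installing termux-services so its service daemon starts. Ollama then runs automatically whenever Termux starts, provided the installed package ships an ollama service definition.)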

Why This Guide Ranks Higher Than Others?

✅ No Linux Emulation Needed – Uses TUR Repo (unique method).

✅ Mobile-Optimized Models – Recommends TinyLlama/Phi-2 (not in most guides).

✅ Fixes “Could not connect” Error – Most tutorials miss this.

Final Thoughts

You now have Ollama running natively in Termux, without a Linux container such as Ubuntu via proot! This is the fastest and most stable method for Android users.

Next Steps:

- Try OpenChat or StableLM for chatbot use.

- Follow the Ollama GitHub repository for updates.

Did this work for you? Comment below! 🚀

FAQ

1. Can I really run Ollama on Android without Linux?

Yes! The TUR Repo method lets you install Ollama directly in Termux without needing a Linux container (like Ubuntu via proot). This is the fastest and most efficient way.

2. Why does Ollama show “Could not connect to Ollama app”?

This means the Ollama service isn’t running. Fix it by:

- Running ollama serve & (background mode)

- Or opening a new Termux session and running ollama serve

3. Which AI models work best on Android?

Due to limited RAM, use smaller models:

- TinyLlama (1.1B parameters, ~2GB RAM)

- Phi-2 (2.7B parameters, ~1.5GB RAM)

- Gemma 2B (2B parameters, ~3GB RAM)

Avoid large models like LLaMA 7B (they are likely to run out of memory and crash).

4. How do I free up storage in Termux?

Delete unused Ollama models with:

ollama rm <model-name> # Example: ollama rm gemma:7b

5. Can I make Ollama start automatically in Termux?

Yes! Use Termux Services:

pkg install termux-services

sv-enable ollama

Now Ollama runs at startup.

6. Why should I avoid the Google Play Store version of Termux?

The Play Store version is outdated and lacks critical updates. Always install Termux from F-Droid for full compatibility with TUR Repo.

7. Will Ollama work on low-end Android devices?

It depends on RAM:

- 2GB RAM: Only TinyLlama may work (with frequent crashes).

- 4GB+ RAM: Runs TinyLlama/Phi-2 smoothly.

Close background apps for better performance.

8. How do I update Ollama in Termux?

Run:

pkg update && pkg upgrade ollama -y

9. Can I use Ollama offline after downloading a model?

✅ Yes! Once a model is downloaded (ollama pull tinyllama), you can use it without internet.

10. Where can I find more Ollama models?

Browse Ollama’s model library at https://ollama.com/library.

To see which models are already downloaded on your device, run:

ollama list # Shows models installed locally, not the full library

Still Have Questions?

Drop a comment below, and we’ll help you out! 🚀